(You probably have inferred what this whole blog post is about by now!)

There are plenty of arguments against AI Art. Here are some:

1. AI Art is visually unappealing.

2. AI Art will take away jobs from human artists.

3. AI Art plagiarizes the work of human artists.

Argument 1 is an argument against AI Art at this point in its technological development. Yes, a lot of it is quite ugly, but an argument against the now is not an argument against the future.

Argument 2 I do broadly agree with. AI Art, as many in power envision it, is merely another tool of automation that allows for the creation of goods without the necessity of human labor. In particular, the ability of AI models to output artwork at scale is a great and justifiable cause for concern.

However, this is an argument against the centralized control of the technology; it is not an argument against the technology itself. If an independent game developer who otherwise can’t afford to hire artists uses AI to generate art assets to build their dream game, have they done something wrong? If the argument is that they shouldn’t make the game if they can’t afford to hire people to help them make it, then that seems like a very capitalist argument to me. Here, we have a tool that allows them to make art without the constraint of a precarious financial position. Yes, AI Art right now absolutely devalues artists’ labour, and people who can afford to hire artists absolutely should, but the flip side of this is that those who cannot afford to do so have an alternative that isn’t just ‘use an art style that requires no skill’.

We should not mistake the arguments against how a technology is used for arguments against the technology itself, especially if there are other possible uses of said technology. The US Government once tried to strictly regulate encryption because it could be used by terrorists or criminals, and yes, it absolutely could, but the technology itself is neutral and could be used by pretty much anyone. By reading this blog you’re probably using encryption right now (my domain parallelsuns.com is HTTPS enabled).

In such scenarios, it is argument 3 that is typically trotted out. AI Models are trained on vast data sets of art created by human artists who typically did not consent to such use of their art; therefore, when outputting new art, they are clearly plagiarizing the artists whose work was used to train them. In our aforementioned game developer scenario, that developer would still be doing something wrong because they have likely used an AI model trained on the work of human artists who did not allow for such a use of their art, and as such, the output of that AI model has plagiarized their work.

The typical counterargument is that this process of training an AI model with existing human-created art bears similarities to that of a human artist being inspired by other human artists and creating their own work using existing art as inspiration or reference.

Intuitively, this argument seems sound to me.

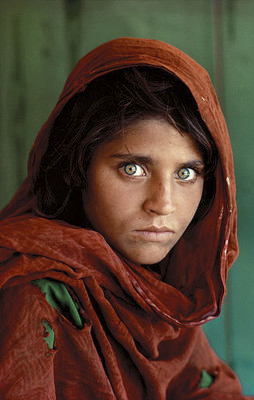

Sure, these Midjourney outputs for ‘Afghan Girl’ bear very obvious similarities to Steve McCurry’s Afghan Girl:

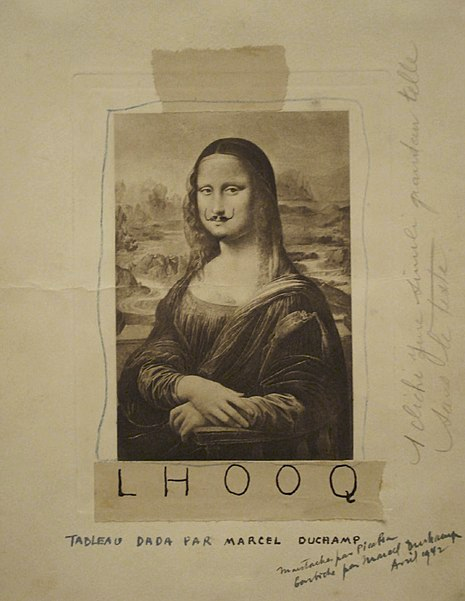

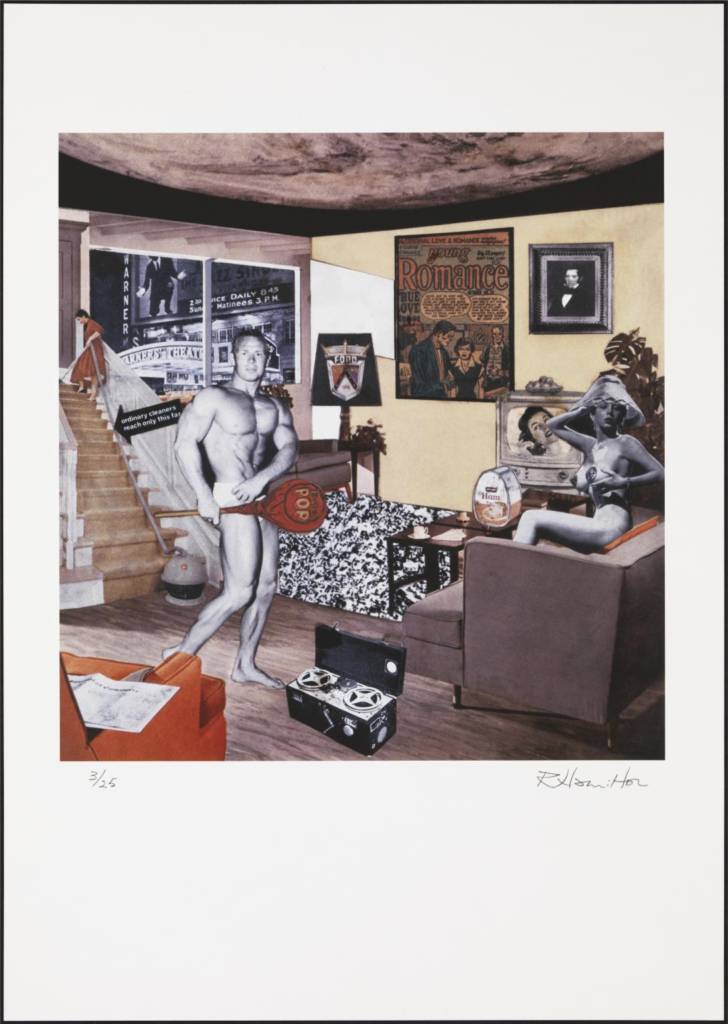

In the Afghan Girl example, most people would consider the Midjourney outputs to be plagiarism, and I would probably agree. Yet there are many examples of artwork, like the aforementioned L.H.O.O.Q or Just what is it that makes today’s homes so different, so appealing?, that explicitly and directly ‘sample’ existing artwork and yet are recognized as ‘original’ works of art. (Maybe you don’t see them that way, but a lot of people do, and you’d have to at least admit that ‘art’ and ‘originality’ are largely socially constructed ideas, so what people en masse think does matter.)

What are the criteria necessary for originality? Is the intent of the artist the defining aspect? What about Jackson Pollock’s paintings, which are made instinctively and with little conscious intention?

One could conceivably develop a robot that painted ‘stochastically’ and arrive at similar results. Yet Pollock’s work is very much considered unique art entirely of his own making, despite the lack of overt human ‘intention’.

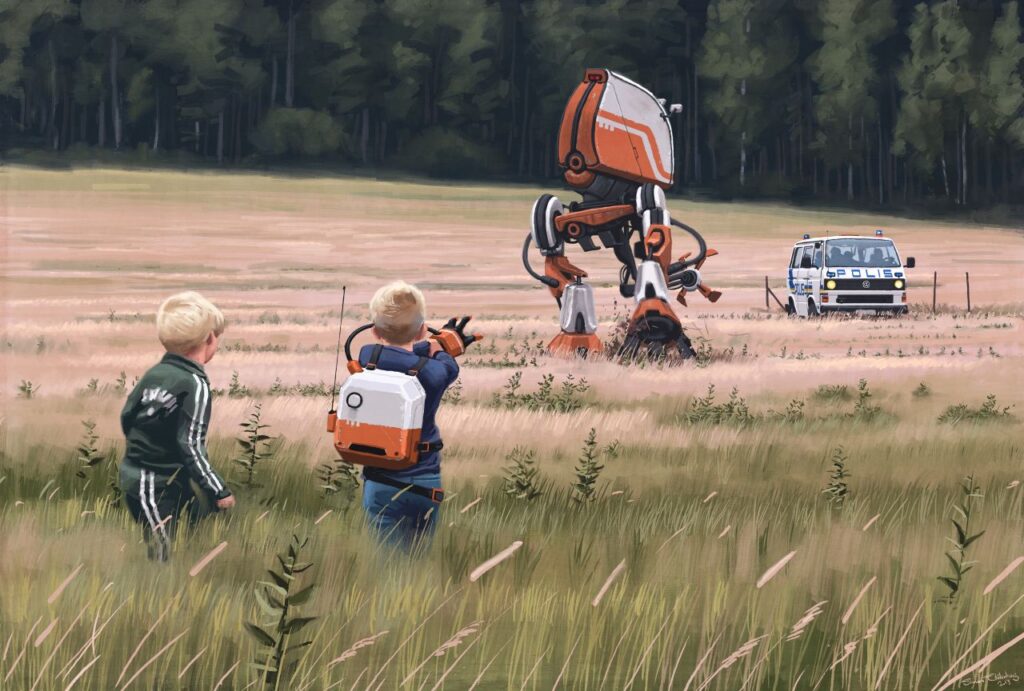

Is it the act of ‘remixing’, of blending together old elements to make something new that makes it original? If collage art like Just what is it that makes today’s homes so different, so appealing? can be considered original artwork, then why not the output of an AI model? Consider AI artwork like this, by Varun Gupta, which I find quite striking and original:

The AI model likely generated this output based on training data which included portrait photography from India and Pakistan, alongside photos of ‘robots’ from movies, TV shows, etc.

One could argue this is more ‘original’ than Just what is it that makes today’s homes so different, so appealing?, as this AI artwork does not directly sample existing work. Sure, the AI model is trained on art from artists who did not consent, but da Vinci did not consent to Duchamp’s use of the Mona Lisa either, nor did the creators of the ads and artwork used in Just what is it that makes today’s homes so different, so appealing?.

Fundamentally, it seems to me that the distinction between ‘inspiration’ and ‘plagiarism’ is deeply subjective, and where you draw the line differs depending on the individual (not to mention the distinction between ethical standards and legal standards of plagiarism). What I have not been able to identify, however, is a consistent standard for originality that would include all of the human artwork we already consider original, while excluding all AI art.

My own intuitive standard for originality would probably hinge heavily on the question: was there an intent to plagiarize? In the showcased examples of human and AI art which would broadly be considered plagiarism (the Dafen replicas and Midjourney’s Afghan Girl), there is clearly an intent to plagiarize, but in the case of Afghan Girl, it is the human prompter who intends to plagiarize. Midjourney simply fulfilled the request. If asked to generate more ‘original’ art, Midjourney would similarly fulfill that request.

In her excellent blog post, Karla Ortiz argues from a different position. Rather than argue based on the output of the AI models, she argues that intrinsically, AI models cannot possibly be ‘inspired’ by existing art, but by definition plagiarize it instead. She argues that the distinction between AI models and human artists is that:

“Artists bring their own technical knowledge, problem solving, experience, thoughts and lives into each artwork.”

I’m still not quite certain this is a satisfactory distinction. After all, what ‘technical knowledge’ was leveraged when Duchamp made L.H.O.O.Q? Let’s assume that the bare essential understanding of knowing how to use a pen qualifies as ‘technical knowledge’. Does a ‘prompt artist’ then not bring in ‘technical knowledge’ when they craft a descriptive prompt to generate a particularly vivid piece of art? Consider the AI outputs of the primitive Stålenhag-based prompts that I wrote above. I’m really not an expert in writing AI prompts and as such, their outputs are hardly vivid or realistic pieces of art.

What if a human prompter wrote their AI art prompt with a great deal of heart, bringing in their experience, thoughts and life, to generate an artwork that they otherwise may not have been able to execute (maybe they lack the skill, can’t afford a drawing tablet, or have an injury or disability)? Does the human’s clarity of intention and sincerity not affect the written prompt, and by extension the final art?

That’s something that I feel Ortiz’s argument does not factor in. All AI art models right now require a human prompter. This prompt may only be a paragraph of text, but there’s no denying that it has tremendous impact on the final output of the AI model. Does this human prompter not import the requisite technical knowledge, problem solving, experience, etc for the final art piece to be considered original art?

For the sake of argument, assume we are talking about the AI model only and that we consider the human prompter to be irrelevant in this argument. In Ortiz’s definition of inspiration, the AI model isn’t inspired, but by that very same definition, a lot of human art might not be either.

Jackson Pollock has said of his painting process, “When I am in my painting, I’m not aware of what I’m doing” (My Painting, 1947). There is a reason his art is associated with the Automatic Art movement. It’s art that’s made instinctively and unconsciously. Did Pollock bring in his thoughts and his life and his experience into his art? What about dancers who might dance without conscious thought in an instinctual or trance-like state?

In fact, the argument that Ortiz is making is hardly new! Art critic Robert Coates made a similar argument about Pollock’s art, describing it as “mere unorganized explosions of random energy, and therefore meaningless”. This is in effect the same argument Ortiz is making. Coates is implying that because there is no conscious thought and intent behind Pollock’s art, it is inherently meaningless as art, or perhaps not even worthy of being called art. Ortiz uses the same logic to imply that AI models can only plagiarize.

If you believe in this argument, that without intent or experience or technical skill, AI models can only plagiarize and cannot make original art, then it seems to me that by extension you must also argue that the work of artists like Pollock or Duchamp or Warhol is not original art. You would be dismissing the work of some human artists in an attempt to protect all human artists. Again, maybe you’re okay with that, maybe you think modern art is a whole lot of bullcrap, but a lot of people disagree, and if art is a social phenomenon then their opinions have to matter too!

None of this should be taken to imply that all AI art is good and original and legitimate or that human artists should not be afraid of AI art. AI Art is easily scalable and as a consequence a lot of it is quite ugly and a lot of it might end up taking jobs from human artists.

However, these are conditions that emerge from the current legal and economic paradigm, where most AI models are controlled by singular corporations and trained on large unfiltered data sets. AI models themselves and the process of developing and training them aren’t intrinsically evil. Consider the work of artists like Holly Herndon, who uses AI to enhance her work. She has developed an AI voice model, Holly+, trained on her own voice. Here is Holly Herndon and Holly+ covering Dolly Parton’s Jolene:

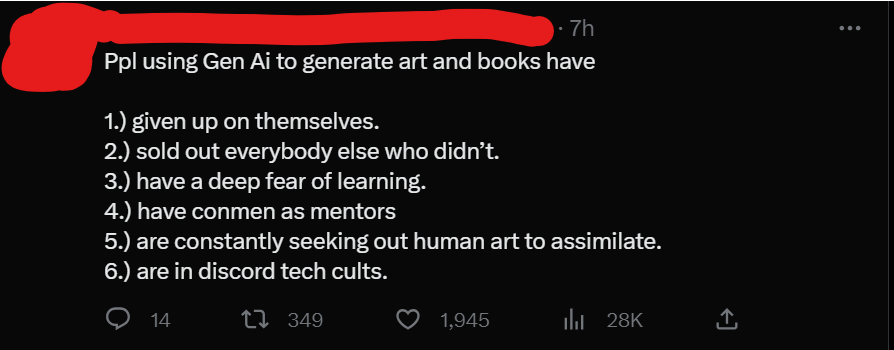

Look, a lot of this blog post is just incessant navel-gazing about modern art and originality but I think the underlying argument that I’m trying to put forth here, that you don’t actually hate AI, you actually hate capitalism, is an important nuance. Maybe you already know all of this. Maybe you already agree with me and think all of the above is obvious. Cool! But there are a lot of people it seems who do think AI is inherently bad:

I think complete dismissal of AI is a mistake, and we can criticize its current uses while envisioning new paradigms where artists work together with ethically trained AI models to enhance their own work, but this isn’t what I see happening. Instead, the knee-jerk reaction is to boycott artists who work with AI without considering which AI model was used or how it was used. ‘AI’ becomes a scare quote in essence. There are artists who use AI in a way that many would see as largely ethical who are going to feel demonized by this attitude (the same thing happened with artists who worked with NFTs). I think this just creates divisions where there need not be any.

Imagine if, upon noticing the fire that the gods kept in their possession, Prometheus chose to call fire a scam or perhaps even ventured to extinguish it. I think that would be a waste. Why not consider ways to develop ethical AI models, released as open source and trained on public domain or opt-in data sets? Why not develop an open standard to embed opt-in/opt-out metadata into artwork? Why not develop legal frameworks for revenue sharing when AI art is used? Why not wrest control of this technology from large corporations?

Why not steal fire from the gods?

P.S. Here are 2 resources that have resonated with me and informed my perspective on this issue:

1. A brilliant Twitter thread that I think really drills down to the underlying anxieties among artists: https://twitter.com/egoraptor/status/1631717867421933583

2. An excellent essay about AI and capitalism by Ted Chiang: https://www.newyorker.com/science/annals-of-artificial-intelligence/will-ai-become-the-new-mckinse